IBM launches cognitive computing cloud platform.

IBM is taking Watson and cognitive computing into the mainstream

The Watson Ecosystem empowers development of “Powered by IBM Watson” applications. Partners are building a community of organizations that share a vision for shaping the future of their industries through cognitive computing. IBM’s cognitive computing cloud platform will help drive innovation and creative solutions to some of life’s most challenging problems. The ecosystem combines business partners’ experience, offerings, domain knowledge, and presence with IBM’s technology, tools, brand, and marketing.

IBM offers a single source for developers to conceive and produce their Powered by Watson applications:

- Watson Developer Cloud — will offer the technology, tools and APIs to ISVs for self-service training, development, and testing of their cognitive application. The Developer Cloud is expected to help jump-start and accelerate creation of Powered by IBM Watson applications.

- Content Store — will bring together unique and varying sources of data, including general knowledge, industry specific content, and subject matter expertise to inform, educate, and help create an actionable experience for the user. The store is intended to be a clearinghouse of information presenting a unique opportunity for content providers to engage a new channel and bring their data to life in a whole new way.

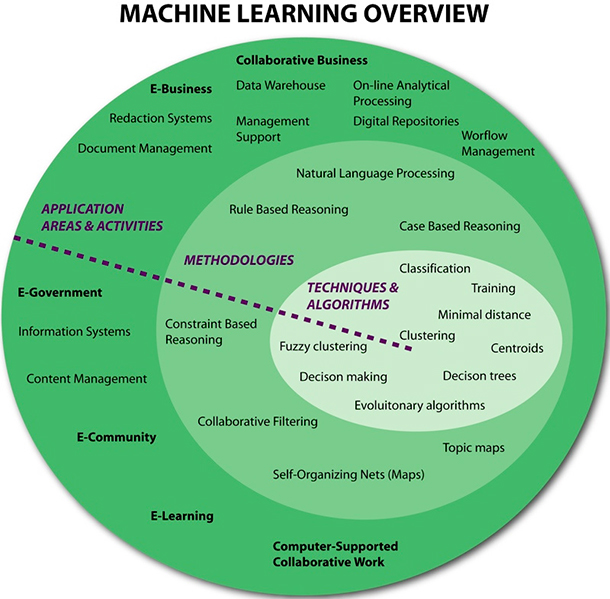

- Network — Staffing and talent organizations with access to in-demand skills like linguistics, natural language processing, machine learning, user experience design, and analytics will help bridge any skill gaps to facilitate the delivery of cognitive applications. .These talent hubs and their respective agents are expected to work directly with members of the Ecosystem on a fee or project basis.

How does cognitive computing differ from earlier artificial intelligence (AI)?

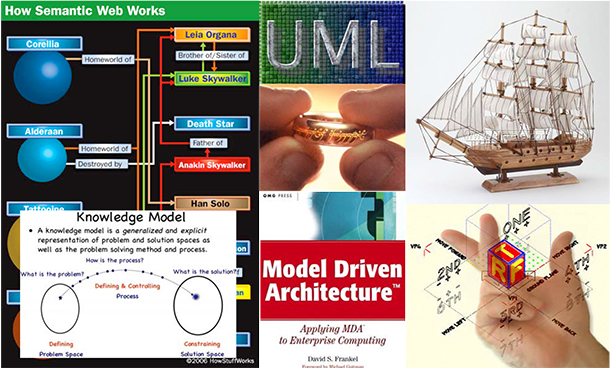

Cognitive computing systems learn and interact naturally with people to extend what either humans or machines could do on their own. In traditional AI, humans are not part of the equation. In cognitive computing, humans and machines work together. Rather than being programmed to anticipate every possible answer or action needed to perform a function or set of tasks, cognitive computing systems are trained using artificial intelligence (AI) and machine learning algorithms to sense, predict, infer and, in some ways, think.

Cognitive computing systems get better over time as they build knowledge and learn a domain – its language and terminology, its processes and its preferred methods of interacting. Unlike the expert systems of the past, which required a human expert to hard-code rules into the system, cognitive computers can process natural language and unstructured data and learn by experience, much as humans do. Although they will have deep domain expertise, cognitive computers will not replace human experts. Instead, they will act as decision support systems that help those experts make better decisions based on the best available data, whether in healthcare, finance or customer service.
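The contrast between hard-coded rules and learning from examples can be illustrated with a minimal Python sketch. It is not Watson code and does not use any IBM API: the routing task, the `RULES` table, the `LearnedRouter` class, and the sample sentences are all hypothetical, chosen only to show the difference in approach.

```python
from collections import Counter, defaultdict

# Expert-system style: a human writes every rule by hand (hypothetical rules).
RULES = {"refund": "billing", "invoice": "billing", "password": "account"}


def rule_based_route(text):
    """Return the label of the first word that matches a hand-written rule."""
    for word in text.lower().split():
        if word in RULES:
            return RULES[word]
    return "unknown"


class LearnedRouter:
    """Toy word-count classifier that builds its knowledge from examples."""

    def __init__(self):
        # Maps each label to the word frequencies seen in its examples.
        self.word_counts = defaultdict(Counter)

    def train(self, text, label):
        self.word_counts[label].update(text.lower().split())

    def route(self, text):
        words = text.lower().split()
        # Score each label by how often it has seen the words in this text.
        scores = {
            label: sum(counts[w] for w in words)
            for label, counts in self.word_counts.items()
        }
        if not scores or max(scores.values()) == 0:
            return "unknown"
        return max(scores, key=scores.get)


router = LearnedRouter()
router.train("i was charged twice this month", "billing")
router.train("my card was charged the wrong amount", "billing")
router.train("i cannot log in to my profile", "account")
router.train("the login page rejects my credentials", "account")

question = "why was my card charged twice"
print(rule_based_route(question))  # no hand-written rule matches this wording
print(router.route(question))      # scored against the training examples
```

The rule table answers only the phrasings its author anticipated, while the trained router improves as more labeled examples are added. A production system would use far richer language models than word counts.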

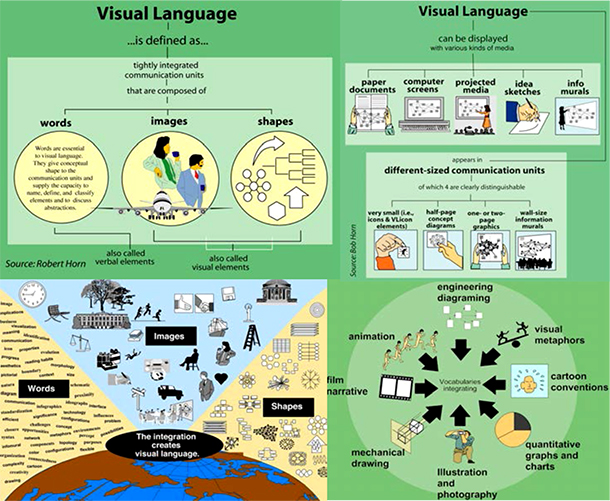

Smart solutions demand strong design thinking

IBM unveils new Design Studio to transform the way we interact with software and emerging technologies

The era of smart systems and cognitive computing is upon us. IBM’s product design studio in Austin, Texas, will focus on how a new era of software will be designed, developed and consumed by organizations around the globe.

In addition to educating existing IBM team leads from engineering, design, and product management on new approaches to design, IBM is recruiting design experts and engaging with leading design schools across the country to bring designers on board, including the d.school: Institute of Design at Stanford University, Rhode Island School of Design, Carnegie Mellon University, North Carolina State University, and Savannah College of Art & Design. Leading skill sets at the IBM Design Studio include visual design, graphic art, user experience design, design development (including mobile development), and industrial design.